This paper proposes an And-Or Graph network.

Intuition and Motivation

Unify and integrate building blocks. A building block is the basic component of popular network architectures, such as Inception modules in GoogLeNet and skip connections in ResNets.

To unify these building blocks, we need a framework that exploits the compositionality, reconfigurability, and lateral connectivity of these modules. Here the authors use an And-Or grammar.

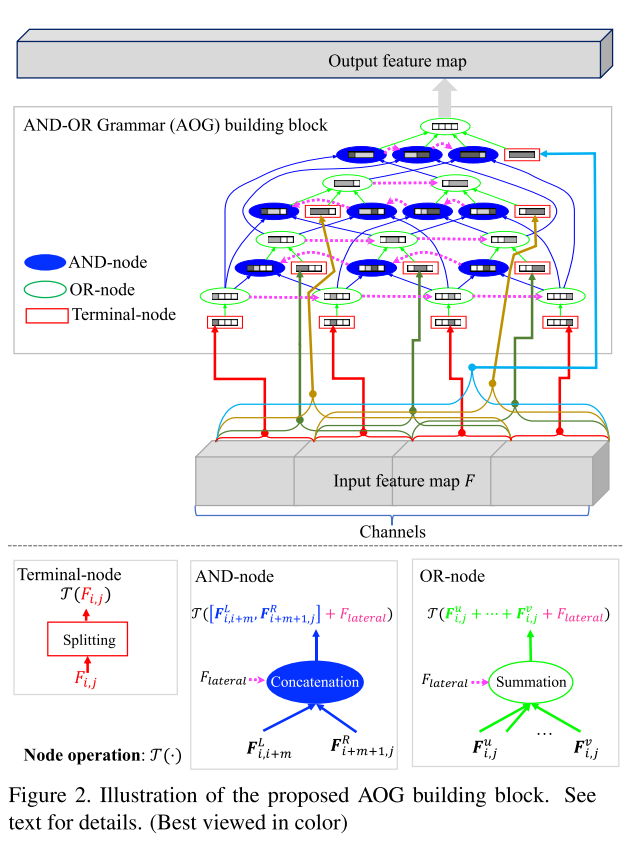

Model

- Terminal-nodes: group convolutions

- And-nodes: concatenation

- Or-nodes: summation

- The hierarchy, as in Deep Pyramid ResNets, leads to a good balance between the depth and width of the network.

- The compositional structure provides a much more flexible information flow.

- The lateral connections increase the depth of nodes on the flow without introducing extra parameters.

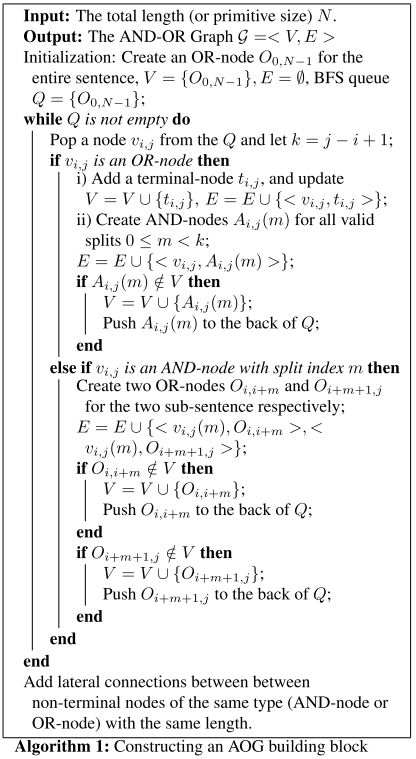
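
The three node types listed above can be illustrated with a small sketch. This is not the paper's implementation: it assumes a single spatial position, so a feature map is just a list of channel values and a group convolution reduces to a per-group matrix product. The function names `terminal_node`, `and_node`, and `or_node` and the weight layout are hypothetical, chosen only for illustration.

```python
from typing import List

Vector = List[float]


def terminal_node(x: Vector, weights: List[List[List[float]]]) -> Vector:
    """Group 1x1 convolution at one spatial position (illustrative assumption).

    The channels of x are split into len(weights) equal groups, and each
    group is multiplied by its own weight matrix (rows = output channels).
    """
    groups = len(weights)
    assert len(x) % groups == 0, "channels must divide evenly into groups"
    size = len(x) // groups
    out: Vector = []
    for g, matrix in enumerate(weights):
        chunk = x[g * size:(g + 1) * size]
        for row in matrix:
            out.append(sum(w * v for w, v in zip(row, chunk)))
    return out


def and_node(children: List[Vector]) -> Vector:
    """And-node: concatenate the channel vectors of all children."""
    out: Vector = []
    for child in children:
        out.extend(child)
    return out


def or_node(children: List[Vector]) -> Vector:
    """Or-node: element-wise sum of children with equal channel counts."""
    assert len({len(c) for c in children}) == 1, "children must match in size"
    return [sum(values) for values in zip(*children)]


# Toy forward pass: two half-width terminals are composed by an And-node,
# then an Or-node sums that composition with a full-width terminal.
x = [1.0, 2.0, 3.0, 4.0]
left = terminal_node(x[:2], [[[2.0]], [[3.0]]])    # 2 groups, 1 channel each
right = terminal_node(x[2:], [[[1.0]], [[1.0]]])
identity = [[1.0, 0.0], [0.0, 1.0]]
whole = terminal_node(x, [identity, identity])     # 2 groups, 2 channels each
composed = and_node([left, right])
fused = or_node([composed, whole])
```

The point of the sketch is the shape bookkeeping: concatenation at And-nodes restores the full channel width, which is what lets the Or-node sum the composed branch with the full-width terminal branch.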

Building the framework

To build the And-Or framework (the building block), the paper implements a construction algorithm and prunes redundant nodes.
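
As a rough sketch of what such a construction could look like, assume the grammar is defined over a sequence of `n` units: every sub-sequence gets an Or-node, whose children are one Terminal-node covering the whole sub-sequence plus one And-node per way of splitting it into a left and a right part. The node encoding, the function name `build_aog`, and the pruning rule (dropping the mirrored splits whose left part is longer than the right part) are assumptions made for illustration, not necessarily the paper's exact procedure.

```python
from collections import deque
from typing import Dict, List, Tuple

Node = Tuple  # ("T", i, j), ("OR", i, j) or ("AND", i, k, j)


def build_aog(n: int, prune: bool = False) -> Dict[Node, List[Node]]:
    """Breadth-first construction of an And-Or graph over units 0..n-1.

    Returns a mapping from each reachable node to its list of children.
    With prune=True, And-nodes whose left part is longer than the right
    part are skipped (a hypothetical symmetry-based pruning rule).
    """
    graph: Dict[Node, List[Node]] = {}
    queue = deque([("OR", 0, n - 1)])
    while queue:
        node = queue.popleft()
        if node in graph:  # already expanded via another parent
            continue
        kind = node[0]
        if kind == "T":
            children: List[Node] = []
        elif kind == "OR":
            _, i, j = node
            children = [("T", i, j)]
            for k in range(i, j):  # split into [i..k] and [k+1..j]
                left_len, right_len = k - i + 1, j - k
                if prune and left_len > right_len:
                    continue
                children.append(("AND", i, k, j))
        else:  # And-node: compose the two sub-sequences
            _, i, k, j = node
            children = [("OR", i, k), ("OR", k + 1, j)]
        graph[node] = children
        queue.extend(children)
    return graph


full = build_aog(4)
pruned = build_aog(4, prune=True)
```

Because nodes are keyed by the sub-sequence they cover, a sub-sequence reached through different splits is expanded only once, which is what makes the result a graph rather than a tree. Comparing `len(full)` with `len(pruned)` shows how much the assumed pruning rule shrinks the block.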

Think about it

Author

- Auto-AndOrNets: use neural architecture search (NAS) to search over the structure of AndOrNets.

- AndOrNets to AOGTransformer

- AndOrNets to AOGReasoner: combine AOGNets and GraphCNNs for common-sense reasoning that combines vision and language.

Me

I think this work is essentially a new neural network architecture that is “wrapped” in the stochastic And-Or grammar idea. I think it should be a flexible structure (an adaptive network). We need a deformable And-Or tree, as in "Discriminatively trained and-or tree models for object detection". Can we use an And-Or grammar to solve parsing problems? Different sentences have different structures. Maybe we could use reinforcement learning or unsupervised learning for the grammar induction task.

Articles possibly related to my idea: