This is an interesting work: it learns text templates while generating sentences from knowledge. Templates are useful because sentences tend to be written from templates rather than word by word. From another point of view, a grammar can be seen as a summary of templates, so the template learning process may also show us how to do grammar induction.

Task

Generate a sentence from an instance in a knowledge graph.

There have been two main approaches to sentence generation:

1. Generating sentences from templates.

2. Generating sentences with a neural encoder-decoder framework.
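As a concrete illustration of the first approach, template-based generation can be as simple as filling slots with values from a record. The record, field names, and template below are hypothetical examples, not taken from the paper:

```python
# A hypothetical knowledge-graph record: field -> value.
record = {"name": "The Eagle", "food": "French", "area": "riverside"}

# A hand-written template (hypothetical); slots in braces are filled from the record.
TEMPLATE = "{name} is a {food} restaurant in the {area} area."

def fill_template(template: str, fields: dict) -> str:
    """Realize a sentence by substituting record values into the template slots."""
    return template.format(**fields)

print(fill_template(TEMPLATE, record))
# The Eagle is a French restaurant in the riverside area.
```

Such templates are fluent and controllable but must be written by hand, which is the gap the learned-template approach below targets.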

This work combines the two approaches with a Hidden Semi-Markov Model (HSMM) parameterized by neural networks.

Hidden Semi-Markov Model

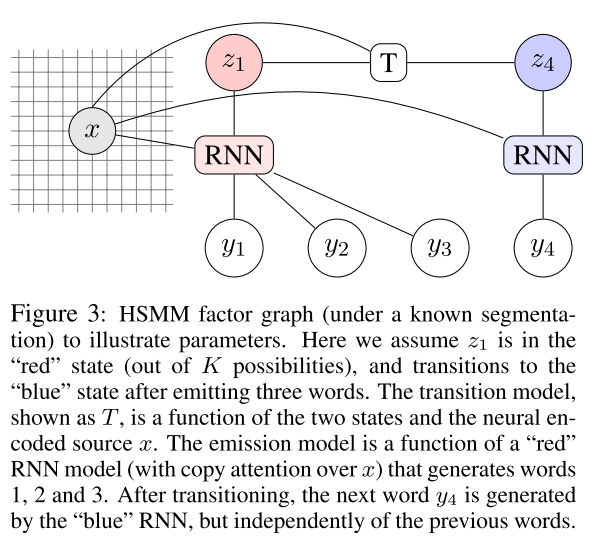

An HSMM models latent segmentations of an output sequence. Informally, an HSMM is much like an HMM, except that emissions may last multiple time-steps, and multi-step emissions need not be independent of each other conditioned on the state. The figure above sketches the model.

Here $(x, y_{1:n})$ is a knowledge-graph instance together with its corresponding sentence, and each $z_i$ is a latent state.
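To make "emissions may last multiple time-steps" concrete, here is a minimal generative sketch of a toy HSMM. The states, transition table, and phrases are hypothetical, and the segment length is implied by the sampled phrase rather than drawn from a separate length distribution. Each state emits a whole multi-token segment at once, and its latent label $z_i$ covers every token of that segment:

```python
import random

START, END = "<start>", "END"

# Hypothetical transition table: p(next state | current state).
TRANSITIONS = {
    START: {"NAME": 1.0},
    "NAME": {"IS_A": 1.0},
    "IS_A": {"FOOD": 1.0},
    "FOOD": {END: 1.0},
}
# Hypothetical segment distributions: each state emits a whole phrase jointly,
# so the tokens inside a segment are not independent given the state.
SEGMENTS = {
    "NAME": [(["The", "Eagle"], 1.0)],
    "IS_A": [(["is", "a"], 0.7), (["is", "a", "nice"], 0.3)],
    "FOOD": [(["French", "restaurant"], 0.5), (["French", "place"], 0.5)],
}

def categorical(pairs, rng):
    """Draw an item from a list of (item, probability) pairs."""
    items, weights = zip(*pairs)
    return rng.choices(items, weights=weights, k=1)[0]

def sample(rng):
    """Sample (per-token states, tokens) by alternating transitions and segment emissions."""
    state, states, tokens = START, [], []
    while True:
        state = categorical(list(TRANSITIONS[state].items()), rng)
        if state == END:
            return states, tokens
        segment = categorical(SEGMENTS[state], rng)
        states.extend([state] * len(segment))  # one latent state spans the whole segment
        tokens.extend(segment)

states, tokens = sample(random.Random(0))
print(list(zip(tokens, states)))
```

In an ordinary HMM every token would get its own state transition; here a single transition is followed by a multi-token emission.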

The objective function is:

Parameterization

Encoder

The encoder is a conventional neural network that encodes the input $x$.
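A minimal sketch of such an encoder, assuming $x$ is a list of (field, value) records. Each record is embedded by summing a field embedding and a value embedding and applying a ReLU, and the records are mean-pooled into a summary vector. The embedding lookup here is a hypothetical stand-in (fixed pseudo-random vectors) for learned parameters, and the exact architecture in the paper may differ:

```python
import random

DIM = 4  # hypothetical embedding size

def embed(token: str, dim: int = DIM) -> list:
    """Hypothetical embedding lookup: a fixed pseudo-random vector per token."""
    rng = random.Random(token)  # seeding with the string makes the vector deterministic
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]

def encode_record(field: str, value: str) -> list:
    """Embed one (field, value) record: sum the two embeddings, then apply ReLU."""
    summed = [f + v for f, v in zip(embed(field), embed(value))]
    return [max(0.0, s) for s in summed]

def encode_instance(records):
    """Encode x as per-record vectors plus a mean-pooled summary vector."""
    vectors = [encode_record(f, v) for f, v in records]
    pooled = [sum(col) / len(vectors) for col in zip(*vectors)]
    return vectors, pooled

x = [("name", "The Eagle"), ("food", "French"), ("area", "riverside")]
record_vecs, summary = encode_instance(x)
print(len(record_vecs), len(summary))  # 3 4
```

Keeping the per-record vectors (not just the pooled summary) lets the decoder attend to or copy from individual records.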

Decoder

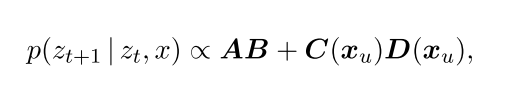

Transition Distribution

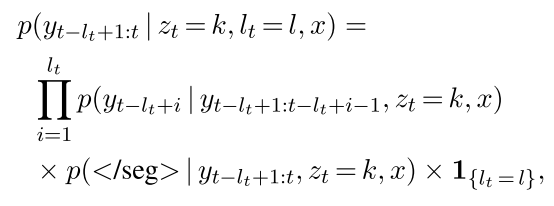
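The exact transition parameterization is given in the figure above. As a hedged sketch only, assuming a learned $K \times K$ state-to-state score matrix `A` plus an input-dependent bias `bias_x` computed from the encoded $x$ (both hypothetical names, not necessarily the paper's form), the next-state distribution is a softmax over their sum:

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def transition_probs(A, bias_x, j):
    """p(z_next = k | z_prev = j, x) for every k, as softmax_k(A[j][k] + bias_x[k]).

    A      : K x K state-to-state scores (hypothetical learned parameters)
    bias_x : length-K scores derived from the encoded input x (hypothetical)
    """
    return softmax([a + b for a, b in zip(A[j], bias_x)])

A = [[0.0, 2.0, 0.0],
     [0.0, 0.0, 2.0],
     [1.0, 0.0, 0.0]]
bias_x = [0.0, 0.0, 0.5]
probs = transition_probs(A, bias_x, j=0)
print([round(p, 3) for p in probs])
```

The input-dependent bias lets the same state inventory favor different template orders for different knowledge-graph instances.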

Emission Distribution

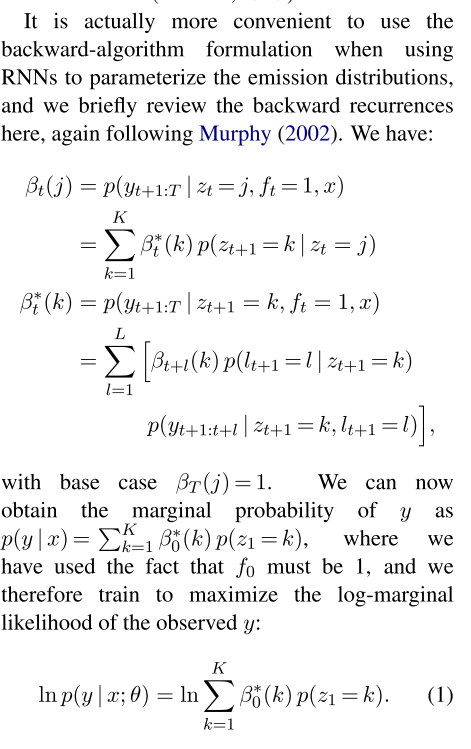
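To illustrate why a multi-token emission need not factor into independent tokens, the sketch below scores a whole segment under a state. It swaps in a hypothetical per-state bigram table for the neural, state-conditioned decoder: each token conditions on the previous one, and an explicit end-of-segment token makes the segment length part of the model:

```python
import math

BOS, EOS = "<s>", "</seg>"

# Hypothetical per-state bigram tables: state -> previous token -> {next token: prob}.
EMISSIONS = {
    "IS_A": {BOS: {"is": 1.0}, "is": {"a": 0.8, EOS: 0.2}, "a": {EOS: 1.0}},
    "FOOD": {BOS: {"French": 1.0}, "French": {"restaurant": 0.6, "food": 0.4},
             "restaurant": {EOS: 1.0}, "food": {EOS: 1.0}},
}

def segment_log_prob(state, segment):
    """log p(segment | state), ending with an end-of-segment token."""
    table = EMISSIONS[state]
    logp, prev = 0.0, BOS
    for tok in list(segment) + [EOS]:
        p = table.get(prev, {}).get(tok, 0.0)
        if p == 0.0:
            return float("-inf")  # segment impossible under this state
        logp += math.log(p)
        prev = tok
    return logp

print(math.exp(segment_log_prob("FOOD", ["French", "restaurant"])))  # ≈ 0.6
```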

Learning

The model marginalizes over the latent variables to maximize the log marginal likelihood of the observed tokens $y$ given $x$.
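The marginalization can be done exactly with the standard HSMM forward algorithm. With $\alpha_t(k)$ the probability of $y_{1:t}$ with a segment in state $k$ ending at $t$, and $L$ the maximum segment length, $\alpha_t(k) = \sum_{l=1}^{L} \sum_{j} \alpha_{t-l}(j)\, p(z = k \mid j, x)\, p(y_{t-l+1:t} \mid k, x)$, and $p(y_{1:n} \mid x) = \sum_k \alpha_n(k)$. The sketch below implements this recursion in log space; it is a generic DP under the assumption that segment length is folded into the emission score, not the paper's exact code, and the toy parameters are hypothetical:

```python
import math

def logsumexp(xs):
    """Numerically stable log(sum(exp(x) for x in xs)); -inf for an empty list."""
    m = max(xs, default=float("-inf"))
    if m == float("-inf"):
        return m
    return m + math.log(sum(math.exp(x - m) for x in xs))

def hsmm_log_marginal(y, num_states, log_init, log_trans, log_emit, max_len):
    """log p(y), summed over all segmentations and state sequences.

    log_init[k]     : log p(z_1 = k)
    log_trans[j][k] : log p(z_{i+1} = k | z_i = j)
    log_emit(k, s)  : log p(segment s | state k), segment length folded in
    alpha[t][k]     : log-prob of y[:t] with the last segment ending at t in state k
    """
    n = len(y)
    alpha = [[float("-inf")] * num_states for _ in range(n + 1)]
    for t in range(1, n + 1):
        for k in range(num_states):
            terms = []
            for l in range(1, min(max_len, t) + 1):
                emit = log_emit(k, y[t - l:t])
                if t - l == 0:  # first segment: use the initial distribution
                    terms.append(log_init[k] + emit)
                else:
                    for j in range(num_states):
                        terms.append(alpha[t - l][j] + log_trans[j][k] + emit)
            alpha[t][k] = logsumexp(terms)
    return logsumexp(alpha[n])

K = 2
log_init = [math.log(0.5)] * K
log_trans = [[math.log(0.5)] * K for _ in range(K)]

def toy_emit(k, seg):
    # Hypothetical scores: 0.2 for one-token segments, 0.05 for two-token segments.
    return math.log(0.2 if len(seg) == 1 else 0.05)

ll = hsmm_log_marginal(["The", "Eagle"], K, log_init, log_trans, toy_emit, max_len=2)
print(math.exp(ll))  # ≈ 0.09
```

The toy value can be checked by hand: four two-segment paths contribute $0.5 \cdot 0.2 \cdot 0.5 \cdot 0.2 = 0.01$ each, and two one-segment paths contribute $0.5 \cdot 0.05 = 0.025$ each, for $0.04 + 0.05 = 0.09$. Because the DP is differentiable, the log marginal likelihood can be maximized directly by gradient ascent.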

Discussion

There is a range of similar work, such as Chong Wang’s:

Sequence Modeling via Segmentations, ICML 2017

Towards Neural Phrase-based Machine Translation (code), ICLR 2018

Subgoal Discovery for Hierarchical Dialogue Policy Learning, EMNLP 2018

Zhouhan Lin’s work is also related: Recurrent-Recursive Network

It would be interesting to learn templates without supervision. For example, could we design a language model that learns templates, as previous work has done for unsupervised Chinese word segmentation? Or could we modify the HSMM to learn discontinuous templates such as “It is … that …”?
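An HSMM segments a sentence into contiguous spans, so in “It is … that …” the fixed words “It is” and “that” would fall into separate segments with nothing tying them together as one unit. The sketch below only illustrates what a discontinuous template is, a fixed frame with gaps, written by hand as a regular expression; the pattern and group names are hypothetical, and nothing here learns the template:

```python
import re

# A hand-written discontinuous template: fixed words "It is" ... "that" with two gaps.
IT_IS_THAT = re.compile(r"^It is (?P<focus>.+?) that (?P<rest>.+)$")

def match_template(sentence):
    """Return the gap fillers if the sentence fits "It is ... that ...", else None."""
    m = IT_IS_THAT.match(sentence)
    return m.groupdict() if m else None

print(match_template("It is the template that matters."))
# {'focus': 'the template', 'rest': 'matters.'}
```

A model for such templates would need latent units that can span non-adjacent tokens, which the contiguous segmentation of an HSMM does not provide.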